Syntax Decisions

Twelve days have passed since my last update, where I briefly introduced AIM. In this post, I’ll show you how AIM fits into the growing generative coding landscape and explain the design decisions that led me here.

As an engineer, I’ve always been drawn to simplifying experiences. Over time, I grew tired of complex builds, unwieldy frameworks, and the constant overhead of scaffolding just to do something simple—especially when working with AI.

I wanted to make a language, and a system to interpret it, so I wouldn’t have to keep reinventing the wheel. I also wanted this language to integrate with existing tools and ecosystems, because no one wants yet another locked-down platform.

"How are you building your AIs?"

"Plain text"

A Prompt-driven World

By now, you’ve probably used (or at least heard of) generative coding tools. Whether it’s Bolt, v0, Cursor, or something like Devin, the pattern is similar: you prompt the AI, it outputs code, and you refine until it’s right.

- Bolt and v0 operate as standalone products and let you prompt the system in a conversational way.

- Cursor and other IDE-based tools integrate LLMs directly into your editor, offering similar functionality inside the tooling you already use.

- Devin (and others like it) run fully autonomously, tying into your team’s codebase and communication platforms like Slack.

Programming has become less about cranking out lines of code and more about prompting. You select text, label it, and ask the system to do something. If it’s off, you keep prompting until it gets closer to what you need.

It struck me that prompting is essentially note-taking. If building software is turning into note-taking, we need a prompting-oriented language—something that reads naturally while still distinguishing between text and instructions.

View from my room in Kuala Lumpur, where I wrote down the first idea for AIM, November 2024

That’s the niche I see for AIM: a file-first, minimal-boilerplate way to orchestrate AI workflows, all within a Markdown-like structure.

Natural Language Programming

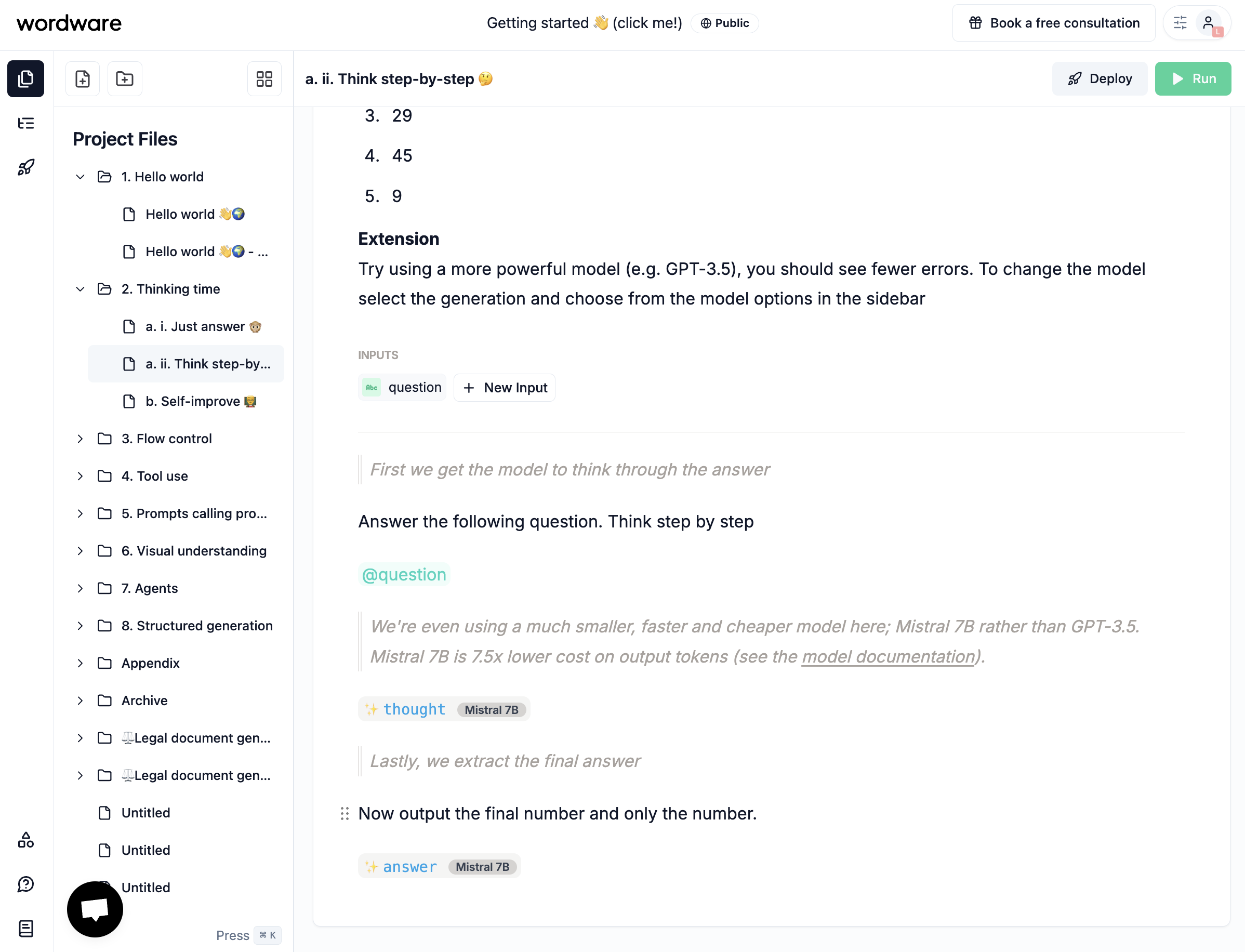

During my research, I came across Wordware, which emphasizes the idea of software as prompts. They have a web app that lets you write code in natural language, Notion-style. Each “wordapp” (their term) is a collection of prompts that run either in the browser or on a server. Effectively, when you build a wordapp, you’re building a cross-platform application.

I love the concept, but Wordware itself is proprietary and relatively closed. I really wanted something that I could author anywhere, in a simple file-based setup rather than being tied to a single web app.

Software programming is simply a way to express ideas, instructions, and concepts in a format the computer understands. It’s a means to the end goal—solving problems.

Solving problems!

Are software developers the only people who can solve problems? Of course not. They’re good at solving computer problems, but in many cases, they are given problems by people who don’t know how to solve them.

Developers are basically translators. They take a problem from someone (or themselves) and break it down into computer instructions. But problem-solving is a team effort, so software development should be too.

In an ideal scenario, you’d just write:

"Do this, do that, do the other thing"

The computer would interpret it and make it happen. That’s essentially prompt-driven development.

But if we’re building bigger systems with agentic workflows, we need reproducibility, testability, version control, and collaboration. We could write in pure natural language and convert it to code, or let a system interpret it directly, but in my opinion it’s still too early for large, complex projects to rely solely on these approaches.

On top of that, we should try to accommodate less-technical collaborators in our workflows, bridging the gap between them and traditional developers. Plain text isn’t ideal for all scenarios. We need a bit more structure and a way to differentiate between instructions and normal language.

This is where Markdown shines. It's simple, easy to read, and supports just enough structure. It has been around for a long time (I've personally used it for more than a decade), and it's widely regarded as the best option for keeping documents both simple and readable.

If I place Markdown between programming languages on one side and plain text on the other, I think it leans more towards plain text.

There are other markup languages too, so I compared Markdown against them to see how it stacks up.

Having decided that Markdown was a strong starting point, I looked into different ways to extend it:

At first, I tried using directives for something straightforward, like querying an LLM and outputting the result.

give me the different options for the syntax

::ai{#decisions model="openai/o1"}

$decisions
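To make the directive idea concrete, here is a minimal JavaScript sketch of what a runtime would need to pull out of a line like the one above: the directive name, the `#id` used to reference the result, and the attributes. The `parseLeafDirective` helper is hypothetical (it is not part of AIM or of any directive library) and handles only single-line leaf directives with straight double quotes.

```javascript
// Hypothetical helper (not from AIM or any directive library): parse a
// single-line leaf directive such as ::ai{#decisions model="openai/o1"}
// into its name, optional #id, and key="value" attributes.
function parseLeafDirective(line) {
  // Exactly two colons, a name, then an optional {...} attribute block.
  const match = /^::([A-Za-z][\w-]*)(?:\{([^}]*)\})?\s*$/.exec(line.trim());
  if (!match) return null; // not a leaf directive (plain text or a ::: container)

  const [, name, rawAttrs = ''] = match;
  const directive = { name, id: null, attributes: {} };

  // Attribute tokens are either #id or key="value" (straight quotes only).
  const tokenRe = /#([\w-]+)|([\w-]+)="([^"]*)"/g;
  let token;
  while ((token = tokenRe.exec(rawAttrs)) !== null) {
    if (token[1] !== undefined) {
      directive.id = token[1];
    } else {
      directive.attributes[token[2]] = token[3];
    }
  }
  return directive;
}

// Logs name "ai", id "decisions", and attributes { model: "openai/o1" }.
console.log(parseLeafDirective('::ai{#decisions model="openai/o1"}'));
```

The `$decisions` reference would then be looked up by that id once the directive has run.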

It seemed intuitive until I realized how messy it gets when you start nesting directives:

:::::container

::::container2

:::container3

::ai{#decisions model="openai/o1"}

:::

::::

:::::
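The mess is not only visual: the colon count is the only thing telling a parser (or a reader) which closing fence belongs to which container. The hypothetical `checkContainerNesting` helper below sketches that bookkeeping with a stack of open containers. For simplicity it requires each closing fence to match its opening fence's colon count exactly, which may be stricter than real directive parsers.

```javascript
// Hypothetical checker: verify that :::name ... ::: container directives nest
// correctly. An opening fence is 3+ colons followed by a name; a closing fence
// is a bare run of 3+ colons. Leaf directives (::name) are ignored.
function checkContainerNesting(source) {
  const stack = []; // currently open containers, innermost last
  let maxDepth = 0;
  const lines = source.split('\n');

  for (let i = 0; i < lines.length; i++) {
    const line = lines[i].trim();
    const open = /^(:{3,})([A-Za-z][\w-]*)/.exec(line);
    const close = /^(:{3,})$/.exec(line);

    if (open) {
      stack.push({ name: open[2], fence: open[1].length });
      maxDepth = Math.max(maxDepth, stack.length);
    } else if (close) {
      const top = stack.pop();
      if (!top) {
        return { ok: false, error: `line ${i + 1}: closing fence with nothing open` };
      }
      // Simplification: the closing run must have exactly as many colons.
      if (close[1].length !== top.fence) {
        return { ok: false, error: `line ${i + 1}: fence does not close "${top.name}"` };
      }
    }
  }
  if (stack.length > 0) {
    return { ok: false, error: `"${stack[stack.length - 1].name}" is never closed` };
  }
  return { ok: true, maxDepth };
}

const nested = [
  ':::::container',
  '::::container2',
  ':::container3',
  '::ai{#decisions model="openai/o1"}',
  ':::',
  '::::',
  ':::::',
].join('\n');
console.log(checkContainerNesting(nested)); // { ok: true, maxDepth: 3 }
```

Swapping the last two closing fences is enough to make the check fail, and nothing in the text itself makes that mistake easy to spot.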

That left me with two options: Markdoc and MDX.

I love MDX and have used it several times. It’s powerful because it lets you interleave arbitrary JavaScript, including React components, directly into your Markdown. Think of it as “docs as code.” However, this can also lead to complex, code-heavy content: MDX doesn't enforce a clear boundary between text and logic, and it doesn't fundamentally rethink how program logic is expressed.

It's quite hard to tell what's text and what's logic. In this case, the input is a variable that's passed to the LLM, and when the file is parsed, the input is interpreted as a variable.

By contrast, Markdoc enforces a cleaner boundary between text and logic—“docs as data.” It uses a fully declarative approach to composition and flow control, which helps maintain clear, well-structured content. With MDX, you get more freedom and flexibility, but the trade-off is that your documents can become as complicated as regular code, hurting maintainability and making the authoring process more cumbersome.
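One way to see the “docs as data” boundary: with Markdoc-style tags, everything between `{%` and `%}` is logic and everything else is text, so a document splits cleanly into two kinds of nodes. The `tokenize` function below is a hypothetical sketch, not Markdoc's actual parser (which builds a full Markdown AST); the sample line uses the tag style AIM adopts.

```javascript
// Hypothetical tokenizer (not Markdoc's real parser): split source into
// text nodes and tag nodes. Anything between {% and %} is logic; the rest is text.
function tokenize(source) {
  const nodes = [];
  const tagRe = /\{%\s*([\s\S]*?)\s*%\}/g;
  let last = 0;
  let match;
  while ((match = tagRe.exec(source)) !== null) {
    if (match.index > last) {
      nodes.push({ type: 'text', value: source.slice(last, match.index) });
    }
    nodes.push({ type: 'tag', value: match[1] }); // e.g. "$input.city"
    last = tagRe.lastIndex;
  }
  if (last < source.length) {
    nodes.push({ type: 'text', value: source.slice(last) });
  }
  return nodes;
}

const nodes = tokenize("What's the weather in {% $input.city %}?\n\n{% ai #weather model=\"openai/o1\" /%}");
console.log(nodes.map((node) => node.type)); // [ 'text', 'tag', 'text', 'tag' ]
```

Because the delimiters are unambiguous, a non-technical reader can skim past the tags while the runtime only needs to look inside them.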

Then I stumbled across a blog post by John Gruber discussing some of Markdown’s original syntax decisions. His point was simple but vital: don’t lose Markdown’s simplicity, or you risk throwing away the very benefits that made it so appealing in the first place.

Markdoc is Stripe’s own content authoring system, implementing a rich superset of Markdown, and released this week as an open source project. It looks wonderful. I love their syntax extensions — very true to the spirit of Markdown. They use curly braces for their extensions; I’m not sure I ever made this clear, publicly, but I avoided using curly braces in Markdown itself — even though they are very tempting characters — to unofficially reserve them for implementation-specific extensions. Markdoc’s extensive use of curly braces for its syntax is exactly the sort of thing I was thinking about.

Reading that post reaffirmed the direction I was heading in. I spent the next few days diving into Markdoc’s documentation to see how it:

- Enforces structure and keeps content and code logically separate.

- Remains true to Markdown while allowing “richer nodes” when needed.

It seemed to echo my own goals: keep Markdown at the core, but allow for a richer set of directives or “nodes” when we really need them.

Based purely on gut feeling, this is where I would now place Markdoc and AIM's Markdown flavour (which is based on Markdoc).

MDX is something I might explore in the future, but for now I think Markdoc is the best option, and I had to make a decision. Perhaps a tag indicating that a snippet is MDX would be a good compromise.

AIM in a Nutshell

AIM stands for “AI Markup Language,” a specialized superset of Markdown that keeps Markdown's simplicity while letting you run complex AI tasks natively. Here’s a minimal example:

---
input:
  - city: string
    description: "Name of the city"
---

What's the weather in {% $input.city %}?

{% ai #weather model="openai/o1" /%}

{% $weather.result %}

- The frontmatter section at the top declares external inputs.

- Text can reference those inputs using the `{% $input.city %}` syntax.

- The `{% ai #weather model="openai/o1" /%}` directive runs the AI workflow.

- The result is stored in the `$weather.result` variable.
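Resolving references like `{% $input.city %}` and `{% $weather.result %}` amounts to a dotted-path lookup in a variables object. The sketch below is a hypothetical illustration of that step (the `interpolate` and `lookup` names are mine, and AIM's actual resolution rules may differ); the variable values are placeholders.

```javascript
// Hypothetical sketch: resolve {% $a.b.c %} references against a variables
// object. Unknown references are left untouched so missing data is easy to spot.
function lookup(vars, path) {
  return path.split('.').reduce(
    (value, key) => (value != null && key in Object(value) ? value[key] : undefined),
    vars,
  );
}

function interpolate(text, vars) {
  return text.replace(/\{%\s*\$([\w.]+)\s*%\}/g, (whole, path) => {
    const value = lookup(vars, path);
    return value === undefined ? whole : String(value);
  });
}

// Placeholder values: `input` would come from the caller, `weather` from the ai tag.
const vars = {
  input: { city: 'Kuala Lumpur' },
  weather: { result: '(model output goes here)' },
};
console.log(interpolate("What's the weather in {% $input.city %}?", vars));
// What's the weather in Kuala Lumpur?
```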

This is how AIM stays close to Markdown but gives you a clear syntax for AI operations.
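Declaring inputs in the frontmatter also means a runtime could check that a file received what it needs before any model is called. Below is a hypothetical `validateInputs` sketch; it assumes the YAML frontmatter has already been parsed into the shape shown in the example (each entry holds one `name: type` pair plus a description) and only checks JavaScript `typeof` types.

```javascript
// Hypothetical sketch: check runtime values against a parsed frontmatter `input` list.
// Assumed shape: each entry has one `name: type` pair plus an optional description.
const declaredInputs = [
  { city: 'string', description: 'Name of the city' },
];

function validateInputs(declared, provided) {
  const errors = [];
  for (const entry of declared) {
    const pair = Object.entries(entry).find(([key]) => key !== 'description');
    if (!pair) continue; // malformed entry: no name/type pair to check
    const [name, type] = pair;
    if (!(name in provided)) {
      errors.push(`missing input "${name}"`);
    } else if (typeof provided[name] !== type) {
      errors.push(`input "${name}" should be a ${type}, got ${typeof provided[name]}`);
    }
  }
  return errors;
}

console.log(validateInputs(declaredInputs, { city: 'Kuala Lumpur' })); // no errors
console.log(validateInputs(declaredInputs, {})); // one error: the missing city
```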

Markdown-Like, But Not Exactly

AIM extends Markdown with some additional directives for AI interactions. That’s why it might not “look like Markdown,” but it’s still human-readable and easy to learn.

Now let's see how AIM compares to JavaScript in a practical example. Below are three versions of the same workflow, in this order: AIM, JavaScript (LangChain), and JavaScript (AI SDK). All three achieve the same result, but notice how AIM's declarative syntax eliminates boilerplate and lets you focus purely on describing what you want to accomplish.

---
input:
  - name: string
    description: "Name of the person"
  - details: string
    description: "Details about the person"
---

Research this person: {% $frontmatter.input.name %}

Details: {% $frontmatter.input.details %}

{% ai #research model="perplexity/sonar" /%}

Write a copy to convince them to use Natural Language for AI Agents.

{% ai #benefits model="anthropic/claude-3.5-sonnet" /%}

Write a poem about them and why using AIM makes sense.

{% ai #poem model="openai/text-davinci-003" /%}

{% $poem.result %}

const { PromptTemplate } = require('@langchain/core/prompts');
const { StringOutputParser } = require('@langchain/core/output_parsers');
const { ChatOpenAI, OpenAI } = require('@langchain/openai');
const { ChatAnthropic } = require('@langchain/anthropic');

// Perplexity's API is OpenAI-compatible, so ChatOpenAI can point at it.
const llmSonar = new ChatOpenAI({
  model: 'sonar',
  apiKey: process.env.PERPLEXITY_API_KEY,
  configuration: { baseURL: 'https://api.perplexity.ai' },
});

const llmClaude = new ChatAnthropic({ model: 'claude-3-5-sonnet-latest' });

// Completion-style model. Note: OpenAI has since retired text-davinci-003;
// gpt-3.5-turbo-instruct is its suggested replacement.
const llmOpenAI = new OpenAI({ model: 'text-davinci-003', temperature: 0.7 });

const researchPrompt = new PromptTemplate({
  inputVariables: ['name', 'details'],
  template: `Research this person: {name}
Details: {details}`,
});

const benefitsPrompt = new PromptTemplate({
  inputVariables: ['name'],
  template: `Now, write a copy to personally convince {name} to start using Natural Language to create their AI Agents utilizing a product called Wordware.
Underline the benefits of using AI to automate their work.`,
});

const poemPrompt = new PromptTemplate({
  inputVariables: ['name'],
  template: `Write a poem which will underline how amazing {name} is and why using a product like Wordware makes sense for them.`,
});

async function generateOutputs() {
  // Each chain is prompt -> model -> plain string.
  const parser = new StringOutputParser();
  const researchChain = researchPrompt.pipe(llmSonar).pipe(parser);
  const benefitsChain = benefitsPrompt.pipe(llmClaude).pipe(parser);
  const poemChain = poemPrompt.pipe(llmOpenAI).pipe(parser);

  const inputs = {
    name: 'John Doe',
    details: 'Tech entrepreneur focused on AI tools',
  };

  const researchOutput = await researchChain.invoke(inputs);
  const benefitsOutput = await benefitsChain.invoke({ name: inputs.name });
  const poemOutput = await poemChain.invoke({ name: inputs.name });

  console.log('Research Output:', researchOutput);
  console.log('Benefits Output:', benefitsOutput);
  console.log('Poem Output:', poemOutput);
}

generateOutputs();

import { generateText } from 'ai';
import { openai } from '@ai-sdk/openai';
import { anthropic } from '@ai-sdk/anthropic';
import { perplexity } from '@ai-sdk/perplexity';

const llmSonar = perplexity('sonar');
const llmClaude = anthropic('claude-3-5-sonnet-latest');
// text-davinci-003 is a completion model, so it goes through openai.completion().
// Note: OpenAI has since retired it; gpt-3.5-turbo-instruct is its suggested replacement.
const llmOpenAI = openai.completion('text-davinci-003');

const researchPrompt = ({ name, details }) => `Research this person: ${name}
Details: ${details}`;

const benefitsPrompt = ({ name }) => `Now, write a copy to personally convince ${name} to start using Natural Language to create their AI Agents utilizing a product called Wordware.
Underline the benefits of using AI to automate their work.`;

const poemPrompt = ({ name }) => `Write a poem which will underline how amazing ${name} is and why using a product like Wordware makes sense for them.`;

async function generateOutputs() {
  const inputs = {
    name: 'John Doe',
    details: 'Tech entrepreneur focused on AI tools',
  };

  try {
    // generateText resolves to a result object; the generated string is in `.text`.
    const research = await generateText({ model: llmSonar, prompt: researchPrompt(inputs) });
    const benefits = await generateText({ model: llmClaude, prompt: benefitsPrompt(inputs) });
    const poem = await generateText({ model: llmOpenAI, prompt: poemPrompt(inputs) });

    console.log('Research Output:', research.text);
    console.log('Benefits Output:', benefits.text);
    console.log('Poem Output:', poem.text);
  } catch (error) {
    console.error('Error generating outputs:', error);
  }
}

generateOutputs();

All three snippets achieve the same goal: researching a person, writing persuasive copy, and generating a poem. However, the AIM version is more declarative, letting you focus on what needs to be done rather than how to implement it. The JavaScript examples, while familiar to many developers, require additional imports, function calls, and boilerplate to orchestrate the AI workflows.
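The difference can be sketched in a few lines: if the workflow is data (a list of text and `ai` steps), one generic loop does the orchestration that the JavaScript versions spell out by hand. This is a hypothetical illustration, not AIM's runtime. It assumes each `ai` step receives the text written since the previous `ai` step (AIM may pass more context, such as earlier results), uses simplified `{name}` placeholders, and replaces real provider calls with a stub `callModel` so it runs offline.

```javascript
// Stub standing in for a real provider call; it just echoes the prompt.
async function callModel(model, prompt) {
  return `[${model}] response to: ${prompt.trim()}`;
}

// Generic runner: text steps accumulate a prompt, ai steps send it to a model
// and store the output as results[id].result (mirroring $poem.result).
async function runWorkflow(steps, vars) {
  const results = {};
  let pending = '';
  for (const step of steps) {
    if (step.type === 'text') {
      // Fill simplified {name}-style placeholders; unknown keys stay as-is.
      pending += step.value.replace(/\{(\w+)\}/g, (m, key) => (key in vars ? String(vars[key]) : m)) + '\n';
    } else if (step.type === 'ai') {
      results[step.id] = { result: await callModel(step.model, pending) };
      pending = ''; // assumption: each ai step only sees text written since the last one
    }
  }
  return results;
}

const steps = [
  { type: 'text', value: 'Research this person: {name}' },
  { type: 'text', value: 'Details: {details}' },
  { type: 'ai', id: 'research', model: 'perplexity/sonar' },
  { type: 'text', value: 'Write a poem about {name}.' },
  { type: 'ai', id: 'poem', model: 'openai/text-davinci-003' },
];

runWorkflow(steps, { name: 'John Doe', details: 'Tech entrepreneur focused on AI tools' })
  .then((results) => console.log(results.poem.result));
// [openai/text-davinci-003] response to: Write a poem about John Doe.
```

Adding a step means adding an entry to the list, not another chain, variable, and log statement.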

By streamlining these tasks into a file-first format and handling AI prompts natively, AIM cuts down on overhead code and token usage. In this example, the JavaScript (LangChain) version weighs in at 480 tokens and the AI SDK version at 377 tokens, while AIM comes in at just 145 tokens: roughly 2.6x to 3.3x fewer tokens than these common libraries.
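The ratios follow directly from the reported counts. This short snippet just reproduces that arithmetic; the token counts are the figures quoted above, not new measurements.

```javascript
// Token counts as reported in this post (GPT-4o tokenizer).
const tokenCounts = { langchain: 480, aiSdk: 377, aim: 145 };

// How many times more tokens each version uses than AIM, rounded to one decimal.
function ratioVsAim(counts) {
  const ratios = {};
  for (const [name, tokens] of Object.entries(counts)) {
    if (name !== 'aim') ratios[name] = Number((tokens / counts.aim).toFixed(1));
  }
  return ratios;
}

console.log(ratioVsAim(tokenCounts)); // { langchain: 3.3, aiSdk: 2.6 }
```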

That leaner footprint translates to lower costs and a faster route to building dynamic, AI-driven solutions. This is a simple example, but the goal is for AIM to address common AI patterns and workflows and make them easier to use.

Moreover, AIM’s syntax is easier to extend and maintain as your project grows. Rather than scattering prompt templates across multiple files or frameworks, you keep all logic in one concise place.

For token counts, I used the OpenAI GPT-4o token calculator.

What's next for AIM?

- Open Sourcing - A public repo is in the works. Once released, you'll be able to view all the internals: how `.aim` files are compiled, run and served.

- Technical Documentation - I'm working on a comprehensive guide to help you understand how AIM works and how to use it.

- Developer Tooling - A command-line interface for running and serving AIM files, and an SDK for integrating AIM into existing projects.

- Cookbooks - A collection of recipes for common use cases.

And hopefully a lot more... In the meantime, you can take a look at a more detailed syntax overview here.

Final Thoughts

By reducing boilerplate and making it easier to connect to an LLM, you streamline development and make the solution more accessible and modular. Every solution should boil down to a file—or a collection of files—written in a format as close to natural language as possible.

- Lower barriers to entry - Non-technical users can use it and easily create solutions

- Simplicity and future-proofing - You can swap LLMs without fuss, and you are not locked into any single app

- Enduring value - As Kepano says, "the files you create are more important than the tools you use to create them," so it's best to keep them in an open, portable format.
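Swapping LLMs “without fuss” works because the model is just a `provider/model` string in the file (for example `openai/o1`). A runtime can split that string and route the call to a provider adapter, as in this hypothetical sketch. The `providers` registry and its adapters are stubs for illustration, not AIM's implementation.

```javascript
// Hypothetical registry of provider adapters; real ones would call each vendor's API.
const providers = {
  openai: (model, prompt) => `openai:${model} <- ${prompt}`,
  anthropic: (model, prompt) => `anthropic:${model} <- ${prompt}`,
  perplexity: (model, prompt) => `perplexity:${model} <- ${prompt}`,
};

// Split "provider/model" at the first slash and return a prompt -> output function.
function resolveModel(spec) {
  const slash = spec.indexOf('/');
  if (slash <= 0 || slash === spec.length - 1) {
    throw new Error(`expected "provider/model", got "${spec}"`);
  }
  const provider = spec.slice(0, slash);
  const model = spec.slice(slash + 1);
  if (!Object.prototype.hasOwnProperty.call(providers, provider)) {
    throw new Error(`unknown provider "${provider}"`);
  }
  return (prompt) => providers[provider](model, prompt);
}

console.log(resolveModel('openai/o1')('Hello')); // openai:o1 <- Hello
console.log(resolveModel('anthropic/claude-3.5-sonnet')('Hello')); // anthropic:claude-3.5-sonnet <- Hello
```

Changing models then means editing one attribute in the document, while the surrounding text stays untouched.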

LLMs are fast becoming the "CPUs" of modern problem-solving, and a file-first approach safeguards us from ever being trapped by today's software platforms. Beyond that, this approach naturally lends itself to "coding agents" because:

- LLMs already produce text in Markdown, which enhances readability, formatting and structure.

- It cuts down token usage significantly (by roughly 2.6x to 3.3x in the example above), making the resulting AI-driven solutions both more efficient and cost-effective.

But the biggest reason for me is empowering humans. We want people to stay in control of how they solve problems, using their own language without fear of vendor lock-in. I'm convinced that there's practically infinite demand for software, and AIM should let everyone contribute without being stuck in a single platform's ecosystem.

Perfection is achieved not when there is nothing more to add, but when there is nothing left to take away.

Antoine de Saint-Exupéry